Is AI-generated disinformation a threat to democracy?

An essay on the future of generative AI on social media

We just published an essay titled *How to Prepare for the Deluge of Generative AI on Social Media* on the Knight First Amendment Institute website. We offer a grounded analysis of what we can do to reduce the harms of generative AI while retaining many of the benefits. This post is a brief summary of the essay. Read the full essay here.

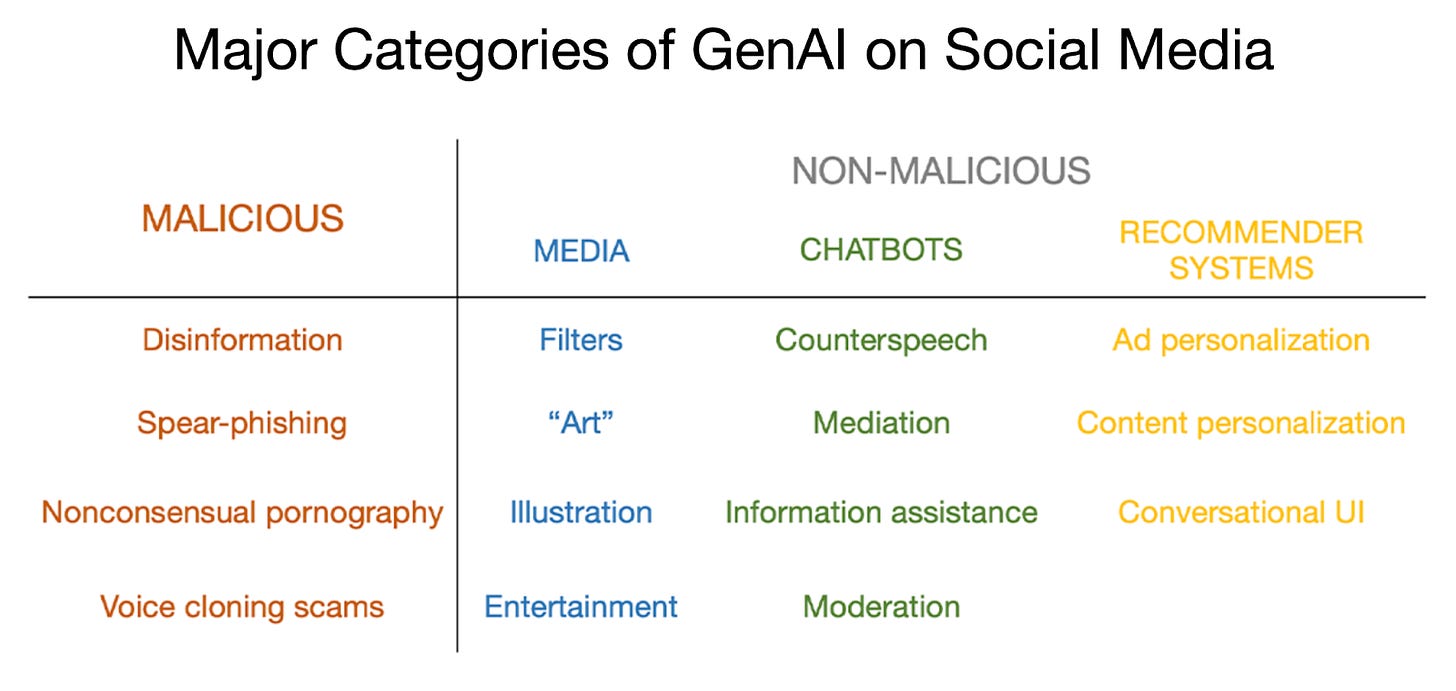

Most conversations about the impact of generative AI on social media focus on disinformation.

Disinformation is a serious problem. But we don’t think generative AI has made it qualitatively different. Our main observation is that when it comes to disinfo, generative AI merely gives bad actors a cost reduction, not new capabilities. In fact, the bottleneck has always been distributing disinformation, not generating it, and AI hasn’t changed that.

So, AI-specific solutions to disinformation, such as watermarking, provenance, and detection of AI-generated content, are barking up the wrong tree. They won’t work, and they solve a non-problem. Instead, we should bolster existing defenses such as fact-checking.

Furthermore, the outsized focus on disinformation means that many urgent problems, such as nonconsensual deepfake pornography, aren’t getting enough attention. We offer a four-factor test to help civil society and policymakers prioritize among various malicious uses.

Beyond malicious uses, many other applications of generative AI are benign, or even useful. While it is important to counteract malicious uses and hold AI companies accountable, an excessive focus on malicious uses can leave researchers and public-interest technologists playing Whac-A-Mole whenever new applications are released, instead of proactively steering the uses of generative AI in socially beneficial directions.

With this in mind, we analyze many types of non-malicious synthetic media, describe the idea of pro-social chatbots, and discuss how platforms might incorporate gen AI into their recommendation engines. Note that non-malicious doesn’t mean harmless. A good example is the filters on apps like Instagram and TikTok, which have contributed to unrealistic beauty standards and body-image issues among teenagers.

Our essay is the result of many discussions with researchers, platform companies, public-interest organizations, and policy makers. It is aimed at all these groups. We hope to spur research into understudied applications, as well as the development of guardrails for non-malicious yet potentially harmful applications of generative AI.

Putting "ad personalization" in the positives section certainly is a choice.

"In fact, the bottleneck has always been distributing disinformation, not generating it, and AI hasn’t changed that." Nonsensical statement. Cambridge Analytica's ability to personalize disinfo at scale, even very crudely and only at the cohort level, was a big part of how Trump was able to win in 2016. Generative AI pushes that capability exponentially closer to one-to-one personalization that can also interact with each recipient on an ongoing basis.

"It’s true that generative AI reduces the cost of malicious uses. But in many of the most prominently discussed domains, it hasn't led to a novel set of malicious capabilities." No, it's not at all just cost reduction—it's a step change, even just in the one example area I described above.

Why are you guys working so hard to sweep serious, legitimate concerns under the rug so quickly and with such faulty logic?

"Finally, note that media accounts and popular perceptions of the effectiveness of social media disinformation at persuading voters tend to be exaggerated. For example, Russian influence operations during the 2016 U.S. elections are often cited as an example of election interference. But studies have not detected a meaningful effect of Russian social media disinformation accounts on attitudes, polarization, or voting behavior." Misleading argument. Did you entirely forget about Cambridge Analytica, or are you just hoping your readers have? Or do you just think the billions of dollars spent on political propaganda, both within the law and outside of it, are spent just for the hell of it?

AI Snake Oil, indeed.